Six months ago, Greg Isenberg started an experiment. Every single day, he would pair program with AI assistants on real projects. Not toy examples or tutorials, but actual production code that needed to ship. What he discovered wasn't just about AI capabilities or limitations. It was about building systems that actually scale and work reliably when the stakes are real.

The breakthrough came around month three when he realized he was doing AI pair programming all wrong.

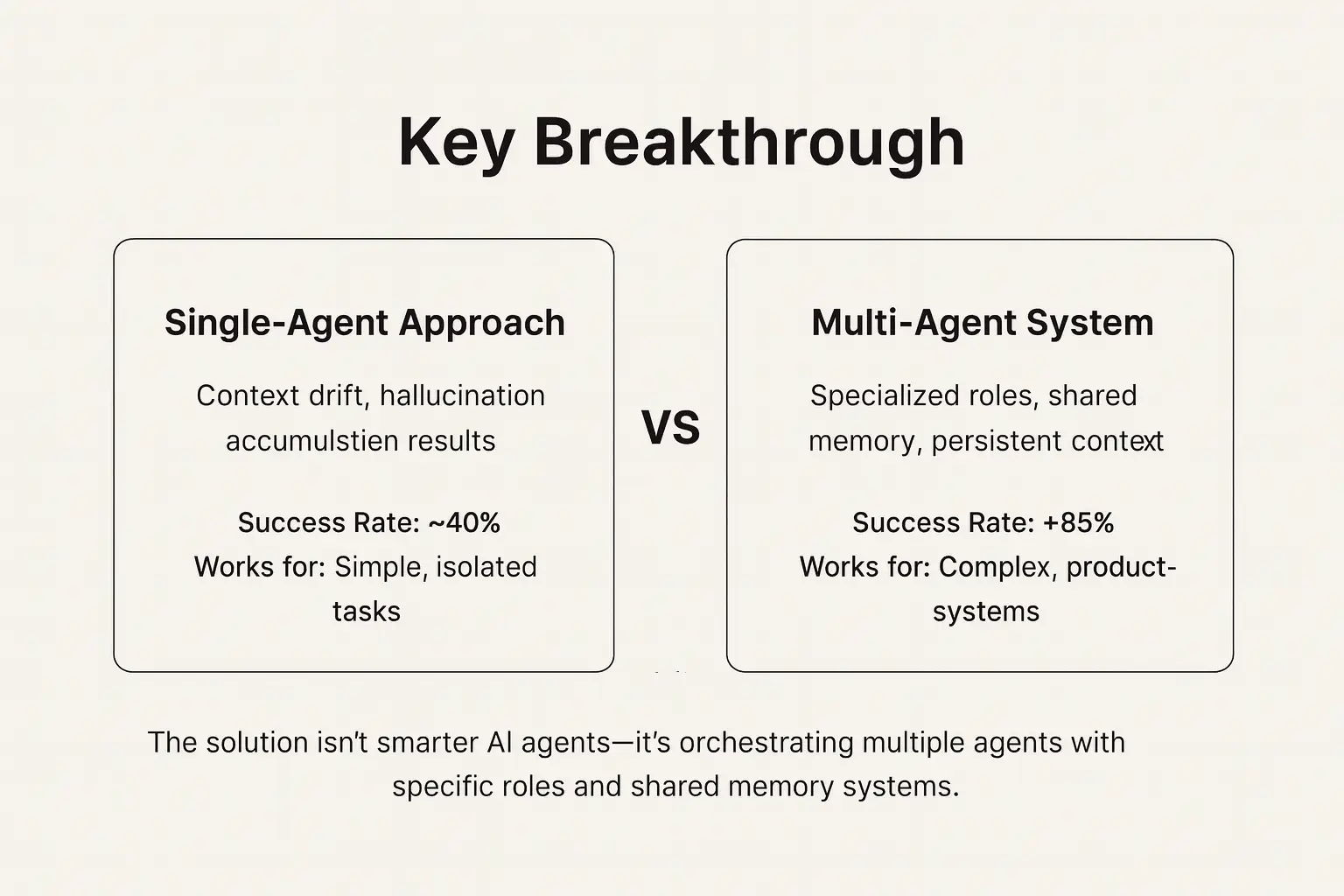

Visualizing the multi-agent AI pair programming workflow

The solution isn't smarter AI agents. It's orchestrating multiple agents with specific roles and a shared memory system.

The Problem with Single-Agent Workflows

Most developers treat AI assistants like super-powered autocomplete. You open ChatGPT or Claude, describe what you want to build, and hope for the best. Sometimes it works brilliantly. Often it doesn't. The problem isn't the AI's intelligence; it's the architecture of how we're using it.

Single-agent workflows break down because of context drift, hallucination accumulation, and the simple fact that complex projects require sustained attention across multiple sessions. When you're building anything non-trivial, you need memory, consistency, and the ability to break down work into manageable chunks.

The Multi-Agent Solution That Changed Everything

The solution came from an unexpected source: a comment from a developer who had been experimenting with orchestrating multiple AI agents. The approach was counterintuitive but brilliant in its simplicity.

Instead of trying to make one AI agent do everything, you create a system where different agents have specific roles and, most importantly, communicate through a shared memory system. Here's how it works in practice.

Step 1: The Planning Agent

First, you designate one AI instance as your "Central Agent" or planning coordinator. This agent's job isn't to write code; it's to think. You give it your project requirements and ask it to break everything down into small, discrete, actionable tasks. Think user stories, but for AI consumption.

The key insight here is that AI agents are excellent at decomposition when that's their only job. When they're not also trying to write code, debug issues, and remember context from three conversations ago, they can create remarkably detailed and logical project breakdowns.
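To make the planner's breakdown usable by the rest of the workflow, it helps to ask for structured output. Below is a minimal Python sketch, assuming you prompt the Central Agent to reply with a JSON list of tasks. The field names (`id`, `title`, `acceptance_criteria`, `depends_on`) and the `parse_plan` helper are hypothetical, not part of the original workflow. The sketch rejects duplicate IDs and unknown dependencies, then returns tasks in dependency order using the standard library's `graphlib`.

```python
import json
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class Task:
    """One small, discrete unit of work produced by the planning agent."""
    id: str
    title: str
    acceptance_criteria: list[str]
    depends_on: list[str] = field(default_factory=list)


def parse_plan(planner_reply: str) -> list[Task]:
    """Parse the planner's JSON reply (assumed format) and return tasks, dependencies first."""
    raw = json.loads(planner_reply)
    tasks = {
        item["id"]: Task(
            id=item["id"],
            title=item["title"],
            acceptance_criteria=item["acceptance_criteria"],
            depends_on=item.get("depends_on", []),
        )
        for item in raw
    }
    if len(tasks) != len(raw):
        raise ValueError("plan contains duplicate task ids")

    # Every dependency must point at a task that exists in the plan.
    for task in tasks.values():
        unknown = [dep for dep in task.depends_on if dep not in tasks]
        if unknown:
            raise ValueError(f"task {task.id} depends on unknown tasks: {unknown}")

    # static_order() raises graphlib.CycleError (a ValueError subclass) on circular plans.
    graph = {task_id: task.depends_on for task_id, task in tasks.items()}
    return [tasks[task_id] for task_id in TopologicalSorter(graph).static_order()]
```

Validating the plan before any execution starts means a circular or dangling dependency is caught while it is still cheap to fix.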

Step 2: Building the Memory System

This is where most people skip ahead, but it's actually the most critical piece. Before you start executing tasks, you need your Central Agent to construct a memory system. This could be a simple log file, a more structured database, or even just a well-organized markdown document.

Every task attempt, completion, failure, and bug discovery gets logged here. But here's the crucial part: this memory system must correlate directly to the original plan. Each entry should reference specific tasks, include timestamps, and capture both what worked and what didn't.
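A JSON Lines file is one simple way to get a memory log that stays tied to the plan. This is a sketch under assumptions: the file format, the `LogEntry` fields, the status values, and the `append_entry`/`read_entries` helpers are all illustrative. Each entry must reference a task ID from the plan and receives a UTC timestamp automatically.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional

# Hypothetical status vocabulary; adjust it to whatever your plan uses.
STATUSES = {"attempted", "completed", "failed", "bug_found"}


@dataclass
class LogEntry:
    task_id: str         # must match a task id from the original plan
    status: str          # one of STATUSES
    what_worked: str
    what_failed: str
    timestamp: str = ""  # filled in automatically when empty


def append_entry(log_path: Path, entry: LogEntry, plan_ids: set[str]) -> LogEntry:
    """Validate an entry against the plan and append it as one JSON line."""
    if entry.task_id not in plan_ids:
        raise ValueError(f"{entry.task_id!r} is not a task in the plan")
    if entry.status not in STATUSES:
        raise ValueError(f"unknown status {entry.status!r}")
    if not entry.timestamp:
        entry.timestamp = datetime.now(timezone.utc).isoformat()
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(entry)) + "\n")
    return entry


def read_entries(log_path: Path, task_id: Optional[str] = None) -> list[LogEntry]:
    """Return logged entries in order, optionally filtered to one task."""
    if not log_path.exists():
        return []
    lines = log_path.read_text(encoding="utf-8").splitlines()
    entries = [LogEntry(**json.loads(line)) for line in lines if line.strip()]
    return [e for e in entries if task_id is None or e.task_id == task_id]
```

An append-only file keeps the full history, so a task's failed attempt and its eventual fix both stay visible.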

Step 3: Task Distribution and Fresh Context

Now comes the magic. Your Central Agent creates specific, detailed prompts for individual tasks. These aren't vague requests like "implement user authentication." They're comprehensive briefs that include context, requirements, expected outputs, and success criteria.

You then copy these prompts to fresh AI sessions, preferably using efficient models like GPT-4o mini or Claude Haiku for routine tasks. The beauty of this approach is that each task gets a clean context window. No accumulated confusion, no context drift, just focused execution on a well-defined problem.
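A brief only works in a fresh session if it carries everything the execution agent needs. Here is a minimal sketch of a brief template covering the four parts named above (context, requirements, expected output, success criteria). The `TaskBrief` structure, the section labels, and the closing instruction are assumptions of this example, not the exact prompts used in the workflow.

```python
from dataclasses import dataclass


@dataclass
class TaskBrief:
    task_id: str
    context: str                 # what already exists and where
    requirements: list[str]
    expected_output: str         # what the agent should hand back
    success_criteria: list[str]  # how you will check the result


def render_brief(brief: TaskBrief) -> str:
    """Turn a brief into one self-contained prompt for a fresh session."""
    missing = [name for name in ("context", "expected_output") if not getattr(brief, name).strip()]
    missing += [name for name in ("requirements", "success_criteria") if not getattr(brief, name)]
    if missing:
        # A vague brief defeats the point of a clean context window.
        raise ValueError(f"brief {brief.task_id} is missing: {', '.join(missing)}")

    def bullets(items: list[str]) -> str:
        return "\n".join(f"- {item}" for item in items)

    return (
        f"Task {brief.task_id}\n\n"
        f"Context:\n{brief.context}\n\n"
        f"Requirements:\n{bullets(brief.requirements)}\n\n"
        f"Expected output:\n{brief.expected_output}\n\n"
        f"Success criteria:\n{bullets(brief.success_criteria)}\n\n"
        "Work only on this task. If anything is ambiguous, say so instead of guessing."
    )
```

Refusing to render an incomplete brief forces the gaps back to the planning step, where they belong.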

Step 4: Logging and Feedback Loop

After each task execution, you update the memory system. Success or failure, bugs encountered, partial completions, everything gets logged. This creates an audit trail and, more importantly, a knowledge base that persists across sessions.

Then you return to your Central Agent with the task log. This agent reviews what happened and either provides follow-up instructions for blocked tasks or generates the next task assignment prompt. The cycle continues.
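The Central Agent's review is a judgment call, but the bookkeeping around it can be made mechanical. This sketch reduces the review to a simple rule, which is an assumption of the example rather than the author's process: surface any task whose latest log status is failed or blocked for follow-up; otherwise, assign the first task that is neither completed nor in progress and whose dependencies are all complete. `PlannedTask`, `next_action`, and the status strings are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PlannedTask:
    id: str
    depends_on: list[str] = field(default_factory=list)


def next_action(plan: list[PlannedTask], log: list[tuple[str, str]]) -> Optional[tuple[str, str]]:
    """Decide the next move from (task_id, status) log entries, oldest first.

    Returns ("follow_up", id) for a failed or blocked task, ("assign", id) for the
    next ready task, or None when nothing can be assigned right now.
    """
    latest: dict[str, str] = {}
    for task_id, status in log:
        latest[task_id] = status  # later entries overwrite earlier ones

    # Problems first: a failed or blocked task gets follow-up instructions.
    for task in plan:
        if latest.get(task.id) in ("failed", "blocked"):
            return ("follow_up", task.id)

    # Otherwise assign the first task that isn't done or underway and whose dependencies are done.
    for task in plan:
        if latest.get(task.id) in ("completed", "in_progress"):
            continue
        if all(latest.get(dep) == "completed" for dep in task.depends_on):
            return ("assign", task.id)

    return None  # everything is finished, in progress, or waiting on dependencies
```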

Why This Actually Works

After three months of refining this workflow, several patterns became clear. First, error rates dropped sharply. When tasks are small and well-defined, AI agents rarely misunderstand requirements. When they do, the blast radius is contained to a single task, not an entire project.

Second, context drift became a non-issue. Each execution agent starts fresh, with exactly the context it needs. No more "I thought we were building a REST API, not GraphQL" moments halfway through a project.

Third, the system gets smarter over time. The memory system captures institutional knowledge: patterns of what works and what doesn't, and solutions to recurring problems. It becomes a form of organizational memory that persists beyond individual conversations.
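One way to put that institutional knowledge to work is to pull relevant past log notes into each new task brief. The sketch below uses plain keyword overlap as a deliberately simple stand-in for smarter retrieval such as embeddings; the `Note` type, the tiny stopword list, and `relevant_notes` are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Note:
    task_id: str
    text: str  # what went wrong and what fixed it


# A tiny, illustrative stopword list; real retrieval would need more care.
STOPWORDS = {"the", "and", "for", "with", "that", "this", "was", "are"}


def keywords(text: str) -> set[str]:
    """Lowercase word tokens, minus stopwords and words shorter than three characters."""
    return {w for w in re.findall(r"[a-z0-9_]+", text.lower()) if len(w) > 2 and w not in STOPWORDS}


def relevant_notes(notes: list[Note], task_description: str, limit: int = 3) -> list[Note]:
    """Return up to `limit` notes sharing the most keywords with the new task."""
    wanted = keywords(task_description)
    scored = []
    for position, note in enumerate(notes):
        overlap = len(wanted & keywords(note.text))
        if overlap:
            scored.append((overlap, position, note))
    # Most overlap first; among ties, prefer newer notes (later in the log).
    scored.sort(key=lambda item: (-item[0], -item[1]))
    return [note for _, _, note in scored[:limit]]
```

For example, a new task about renaming a database column would surface earlier notes about migration failures, so the execution agent starts with the known workaround instead of rediscovering it.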

The Surprising Benefits

What I didn't expect was how this approach would improve my own development process. When you're forced to think in terms of discrete, well-defined tasks, you naturally write better requirements. The planning phase becomes more thoughtful because you know the execution agents will only be as good as the instructions you give them.

The logging system also creates natural documentation. Every significant decision, every bug encountered, every solution discovered gets recorded. Six months later, I can look back at projects and understand not just what was built, but why specific choices were made.

The Human Element

This isn't about replacing human judgment with AI orchestration. It's about creating a system where human strategic thinking is amplified by AI tactical execution. You're still making the important decisions about architecture, user experience, and business logic. But the tedious work of translating those decisions into working code becomes dramatically more efficient.

The most valuable skill I've developed isn't prompting individual AI agents better. It's learning to think in terms of systems and workflows that can be reliably executed by AI agents.

What's Next

Six months in, I'm convinced this multi-agent approach represents a fundamental shift in how we should think about AI-assisted development. We're not just using AI as a coding assistant; we're building AI-powered development workflows.

The next frontier is automation. Instead of manually copying prompts between sessions, we need tools that can orchestrate these workflows directly. The components are all there: planning agents, memory systems, task distribution, and feedback loops. Someone just needs to package it into tooling that any developer can use.
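The orchestration loop itself is small. In this sketch, `plan_next` and `execute` are hypothetical stand-ins for calls to a planning model and an execution model; wiring them to a real API is left out. The planner sees the whole log, while the executor only ever receives a single prompt, which mirrors the fresh-context rule from Step 3. The `max_steps` cap is an added safeguard against a loop that never finishes.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class LogRecord:
    task_id: str
    ok: bool
    notes: str


# Hypothetical stand-ins for model calls. The planner sees the whole log;
# the executor sees only one prompt, so each task starts with a clean context.
PlanNext = Callable[[list[LogRecord]], Optional[tuple[str, str]]]  # -> (task_id, prompt) or None
Execute = Callable[[str], tuple[bool, str]]                        # prompt -> (ok, notes)


def run(plan_next: PlanNext, execute: Execute, max_steps: int = 50) -> list[LogRecord]:
    """Plan, execute, and log until the planner reports that nothing is left."""
    log: list[LogRecord] = []
    for _ in range(max_steps):
        assignment = plan_next(log)
        if assignment is None:
            return log
        task_id, prompt = assignment
        ok, notes = execute(prompt)
        log.append(LogRecord(task_id, ok, notes))
    raise RuntimeError(f"stopped after {max_steps} steps without finishing")
```

Because both agents are plain callables, the same loop can be exercised with fake functions in tests before any real model is connected.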

Until then, the manual approach works remarkably well. It takes discipline to maintain the process, but the results speak for themselves. More reliable AI assistance, better project outcomes, and a development workflow that actually scales with project complexity.

The future of AI pair programming isn't about making AI agents smarter. It's about making them work together more effectively.

Credit: @gregisenberg

Conclusion

The breakthrough in AI pair programming isn't about finding the perfect AI model or crafting the perfect prompt. It's about architecting systems where multiple AI agents can collaborate effectively, maintain context across sessions, and build institutional knowledge over time.

This multi-agent approach transforms AI from a sometimes-helpful coding assistant into a reliable development partner. The key is understanding that the future of AI-assisted development lies not in individual agent intelligence, but in orchestrated agent collaboration.

For developers ready to move beyond single-agent limitations, this workflow represents a practical path forward. It requires discipline and systematic thinking, but the payoff in reliability and scalability makes it worth the investment.
